微调用于AIOPS场景的大模型(一)--垂类数据采集

模型仅具备各领域的通用知识,对于垂类仍有进步空间,这也是医疗、政务类模型出现的原因。我们在尝试AIagent时发现模型并不够聪明,对于安装性能分析工具,vim前后台等问题无法进展到下一步,详见 Autogen 运维排错实践-复杂案例。此次尝试使用偏运维领域的ServerFault,爬取经过人工审核的有效答案来微调模型,观察效果。简言之,教模型所不擅长

步骤

- 爬取ServerFault,筛选有效问答

- 微调模型

- AutoGen配置微调后模型

先看效果,根据采集到的数据,统计出ServerFault热门词云

爬虫

筛选逻辑,根据Active状态&前500页&作者vote过的问题,分别记录问题链接、标题、内容、发布时间、更新时间、被查看总数、投票总数;答案内容、得分9个字段,两张表通过外键关联

CREATETABLE Posts (

PostID INTEGERPRIMARYKEY,

PostLink TEXTNOTNULL,

Title TEXTNOTNULL,

PostContent TEXTNOTNULL,

PostTime TEXTNOTNULL,-- ISO8601 strings ("YYYY-MM-DD HH:MM:SS.SSS")

ModifyTime TEXTNOTNULL,

ViewCount INTEGERNOTNULL,

VoteCount INTEGERNOTNULL

);

CREATETABLE Answers (

AnswerID INTEGERPRIMARYKEY,

PostID INTEGER,

AnswerContent TEXTNOTNULL,

VoteCount INTEGERNOTNULL,

FOREIGNKEY(PostID)REFERENCES Posts(PostID)

);NO;经过控制爬虫速率,切换代理地址,共采集问题、答案数

| 数量 | |

|---|---|

| Posts | 6681 |

| Answers | 16253 |

VoteCount分布

| 0-100 | 101-200 | 201-300 | 301-400 | 401-500 | >500 | |

|---|---|---|---|---|---|---|

| Posts | 6278 | 85 | 32 | 13 | 1 | 5 |

| Answers | 15643 | 150 | 31 | 16 | 7 | 8 |

问题Top10

| 问题 | VoteCount |

|---|---|

| How to determine if a bash variable is empty? | 1014 |

| What exactly do the colors in htop status bars mean? | 598 |

| How do I tell Git for Windows where to find my private RSA key? | 558 |

| "In Nginx, how can I rewrite all http requests to https while maintaining sub-domain?" | 554 |

| mysqldump throws: Unknown table 'COLUMN_STATISTICS' in information_schema (1109) | 503 |

| Displaying a remote SSL certificate details using CLI tools | 432 |

| "Why is my crontab not working, and how can I troubleshoot it?" | 395 |

| Showing total progress in rsync: is it possible? | 384 |

| How to set environment variable in systemd service? | 379 |

| How do you search for backdoors from the previous IT person? | 378 |

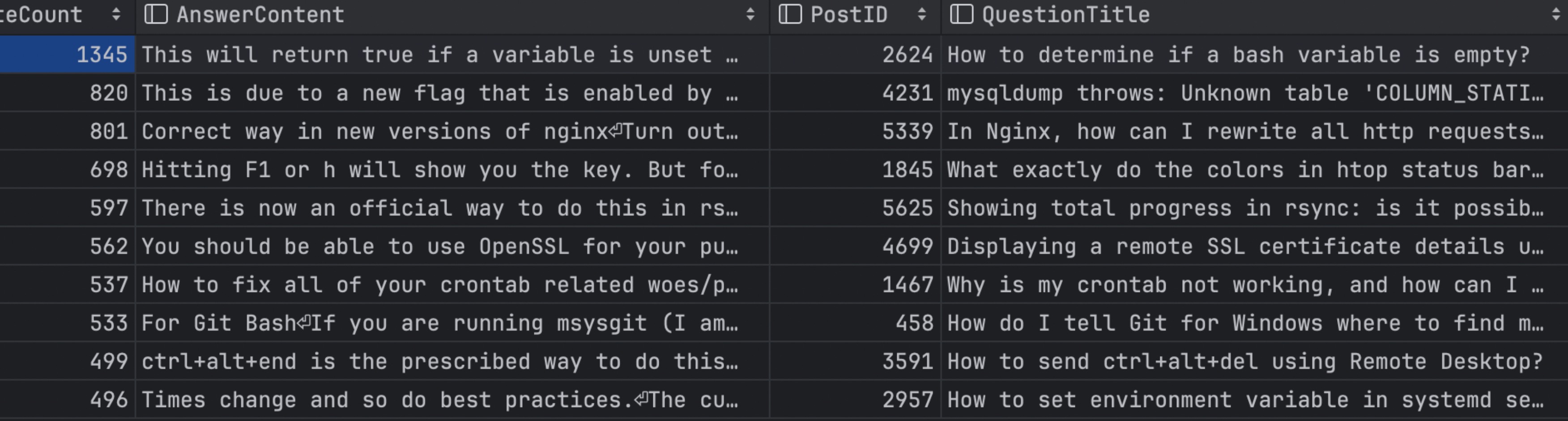

答案Top10

被查看次数Top10

| 问题 | 被查看数 |

|---|---|

| How to determine if a bash variable is empty? | 1431048 |

| heck if port is open or closed on a Linux server? | 1408291 |

| Resolve host name from IP address | 1214006 |

| How do I force sync the time on Windows Workstation or Server? | 1208122 |

| How do I tell Git for Windows where to find my private RSA key? | 1152977 |

| How to check sshd log? | 1021535 |

| What causes the 'Connection Refused' message? | 990564 |

| "Why is my crontab not working, and how can I troubleshoot it?" | 970860 |

| How to send ctrl+alt+del using Remote Desktop? | 907662 |

| How do I convert a .cer certificate to .pem? | 817360 |

”万众瞩目”问题,被查看数top10与问题top10的交集部分

| 问题 | 被查看数 | 投票数 | |

|---|---|---|---|

| How to determine if a bash variable is empty? | 1431048 | 1014 | 链接 |

| How do I tell Git for Windows where to find my private RSA key? | 1152977 | 558 | 链接 |

| Why is my crontab not working, and how can I troubleshoot it? | 970860 | 395 | 链接 |

代码部分

import requests

from bs4 import BeautifulSoup

import re

import time

import sqlite3

# 解析查看次数

def parse_view_count(view_count_title):

match = re.search(r'\d{1,3}(?:,\d{3})*', view_count_title)

if match:

view_count_str = match.group().replace(',', '')

return int(view_count_str)

return 0

# 获取答案详情

def get_answers_details(soup):

answers_details = []

answers_divs = soup.find_all("div", class_=re.compile("answer"))

for answer_div in answers_divs:

answer_id = answer_div.get('data-answerid')

vote_count = answer_div.get('data-score')

answer_content_div = answer_div.find("div", class_="s-prose js-post-body")

answer_content = answer_content_div.text.strip() if answer_content_div else "No answer content"

if answer_id and vote_count:

answers_details.append({

"AnswerID": answer_id,

"VoteCount": int(vote_count),

"AnswerContent": answer_content

})

return answers_details

# 获取问题详情

def get_question_detail(question_url):

response = requests.get(question_url)

if response.status_code != 200:

return "Failed to retrieve the question page"

soup = BeautifulSoup(response.text, "html.parser")

title = soup.find("a", class_="question-hyperlink").text.strip()

post_content = soup.find("div", class_="s-prose js-post-body").text.strip()

post_time = soup.find("time", itemprop="dateCreated")["datetime"]

try:

modify_time = soup.find("a", href="?lastactivity")["title"]

except TypeError:

modify_time = post_time

view_count_div = soup.find("div", title=lambda x: x and x.startswith("Viewed"))

view_count_title = view_count_div['title'] if view_count_div else 'Viewed 1 time'

view_count = parse_view_count(view_count_title)

vote_count = int(soup.find("div", class_="js-vote-count").get('data-value', 0))

answers_details = get_answers_details(soup)

question_detail = {

"Title": title,

"PostContent": post_content,

"PostTime": post_time,

"ModifyTime": modify_time,

"ViewCount": view_count,

"VoteCount": vote_count,

"AnswersDetails": answers_details

}

return question_detail

# 获取问题URLs

def get_question_urls(page_url):

response = requests.get(page_url)

if response.status_code == 200:

soup = BeautifulSoup(response.text, "html.parser")

divs_with_accepted_answers = soup.find_all("div",

class_="s-post-summary--stats-item has-answers has-accepted-answer")

question_urls = []

for div in divs_with_accepted_answers:

question_id = div.find_parent("div", class_="s-post-summary js-post-summary")['data-post-id']

title_tag = div.find_parent("div", class_="s-post-summary js-post-summary").find("h3",

class_="s-post-summary--content-title").find(

"a")

if title_tag and 'href' in title_tag.attrs:

full_url = f"https://serverfault.com{title_tag.attrs['href']}"

question_urls.append(full_url)

return question_urls

else:

print("Failed to retrieve the webpage")

return []

# 插入帖子和答案到数据库

def insert_post_and_answers(conn, question_detail, post_link):

c = conn.cursor()

# 插入帖子详情

c.execute('''INSERT INTO Posts (PostLink, Title, PostContent, PostTime, ModifyTime, ViewCount, VoteCount)

VALUES (?, ?, ?, ?, ?, ?, ?)''',

(post_link, question_detail["Title"], question_detail["PostContent"],

question_detail["PostTime"], question_detail["ModifyTime"],

question_detail["ViewCount"], question_detail["VoteCount"]))

post_id = c.lastrowid

# 插入答案详情之前检查AnswerID是否存在

for answer in question_detail["AnswersDetails"]:

# 检查AnswerID是否存在

c.execute('''SELECT AnswerID FROM Answers WHERE AnswerID = ?''', (answer["AnswerID"],))

result = c.fetchone()

if result is None:

# AnswerID不存在,可以安全插入

c.execute('''INSERT INTO Answers (AnswerID, PostID, AnswerContent, VoteCount)

VALUES (?, ?, ?, ?)''',

(answer["AnswerID"], post_id, answer["AnswerContent"], answer["VoteCount"]))

else:

# AnswerID已存在,可选择跳过或更新

# 例如,这里我们选择跳过

print(f"Skipping insert for AnswerID {answer['AnswerID']} as it already exists.")

conn.commit()

# 数据库连接

conn = sqlite3.connect('spider')

base_url = "https://serverfault.com/questions?tab=active&page="

# 直接在这里设置你想爬取的起始页和结束页

start_page = 479 # 例如,从第10页开始

end_page = 500 # 例如,到第12页结束

for page_number in range(start_page, end_page + 1):

page_url = f"{base_url}{page_number}"

print(f"Fetching questions from: {page_url}")

question_urls = get_question_urls(page_url) # 确保这个函数已经被定义

for url in question_urls:

question_detail = get_question_detail(url) # 确保这个函数已经被定义

if isinstance(question_detail, dict): # 确保获取到了有效的问题详情

insert_post_and_answers(conn, question_detail, url) # 确保这个函数已经被定义

if page_number < end_page:

print("Waiting for 10 seconds before the next page...")

time.sleep(20) # 在请求之间等待30秒

# 关闭数据库连接

conn.close()SQL部分

SELECT Posts.*, Answers.*

FROM Posts

LEFT JOIN Answers ON Posts.PostID = Answers.PostID

WHERE Posts.PostTime >= '2020-01-01 00:00:00' OR Posts.ModifyTime >= '2020-01-01 00:00:00';

SELECT Posts.*, Answers.*

FROM Posts

LEFT JOIN Answers ON Posts.PostID = Answers.PostID

WHERE Posts.PostTime >= '2020-01-01 00:00:00' OR Posts.ModifyTime >= '2020-01-01 00:00:00'

ORDER BY Posts.ViewCount DESC;

SELECT 'Posts', COUNT(*) FROM Posts

UNION

SELECT 'Answers', COUNT(*) FROM Answers;

SELECT Posts.*, Answers.*

FROM Posts

LEFT JOIN Answers ON Posts.PostID = Answers.PostID

WHERE Answers.AnswerID = 62338;

SELECT Posts.*, Answers.*

FROM Posts

LEFT JOIN Answers ON Posts.PostID = Answers.PostID

WHERE Answers.PostID is null;

SELECT Posts.*, Answers.*

FROM Posts

LEFT JOIN Answers ON Posts.PostID = Answers.PostID

WHERE PostLink like '%254627%';

delete from posts where PostID in ( SELECT Posts.PostID

FROM Posts

LEFT JOIN Answers ON Posts.PostID = Answers.PostID

WHERE Answers.PostID is null);

SELECT Posts.PostID

FROM Posts

LEFT JOIN Answers ON Posts.PostID = Answers.PostID

WHERE Answers.PostID is null;

SELECT *

FROM Answers

WHERE answerContent IS NULL;

-- 问题数

SELECT COUNT(*) AS QuestionsOver200Votes

FROM Posts

WHERE VoteCount > 500;

-- 答案数

SELECT COUNT(*) AS AnswersOver200Votes

FROM Answers

WHERE VoteCount > 500;

SELECT COUNT(*)

FROM Answers

WHERE VoteCount BETWEEN 401 AND 500;

SELECT PostID, Title, VoteCount, PostLink

FROM Posts

WHERE Posts.PostTime >= '2024-01-01 00:00:00'

ORDER BY VoteCount DESC

LIMIT 10;

SELECT

A.AnswerID,

A.VoteCount AS AnswerVoteCount,

A.AnswerContent,

P.PostID,

P.Title AS QuestionTitle,

P.VoteCount AS QuestionVoteCount

FROM Answers A

JOIN Posts P ON A.PostID = P.PostID

ORDER BY A.VoteCount DESC

LIMIT 10;

SELECT PostID, Title, ViewCount, PostLink

FROM Posts

ORDER BY ViewCount DESC

LIMIT 10;

SELECT P.PostID, P.Title, P.ViewCount, P.VoteCount, P.PostLink

FROM Posts P

WHERE P.PostID IN (

SELECT PostID

FROM Posts

ORDER BY ViewCount DESC

LIMIT 10

)

AND P.PostID IN (

SELECT PostID

FROM Posts

ORDER BY VoteCount DESC

LIMIT 10

)

ORDER BY P.ViewCount DESC, P.VoteCount DESC;