Background

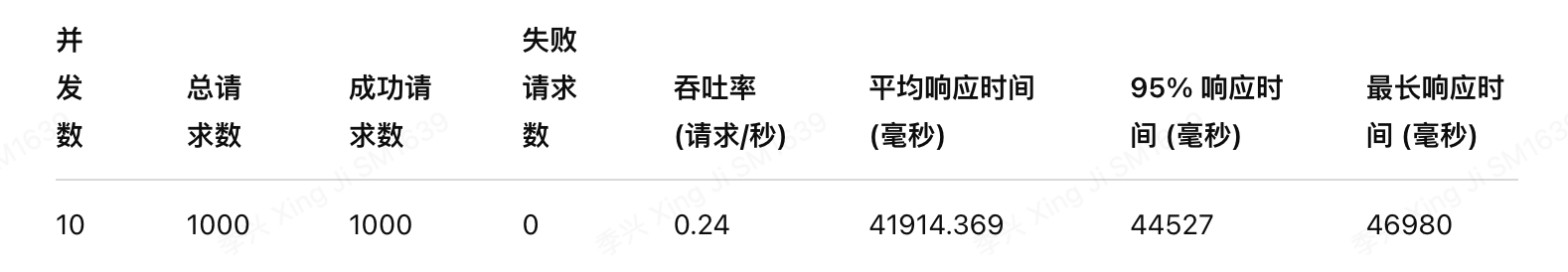

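Goal: deploy DeepSeek-Coder-V2-Lite-Instruct:14b locally with basic high availability, monitoring, and security.

A plain Ollama install had three problems:

- By default it used only the first GPU.
- Calling several models concurrently triggered a bug: `ollama ps` reported 100% GPU while inference actually ran on the CPU.
- A single instance offers no high availability.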

Solution

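Multi-GPU Ollama deployment: each of the four RTX 4090 cards runs its own Ollama instance as a separate systemd service, so all four cards are used in parallel. At the edge, nginx load-balances requests across the instances.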
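The scheme hinges on a fixed mapping. GPU *i* gets its own instance on port 11434 + *i*, and the nginx upstream lists those ports. The minimal bash sketch below shows that mapping and prints the nginx `server` lines for whichever GPU indices should sit behind the load balancer. The helper names `port_for_gpu` and `upstream_servers` are hypothetical; they are not part of Ollama or nginx.

```bash
#!/usr/bin/env bash
# Hypothetical helpers illustrating the GPU -> port convention of this setup.
# Assumption: instance numbering starts at Ollama's default port 11434.

BASE_PORT=11434

# Port of the Ollama instance pinned to GPU $1
port_for_gpu() {
  echo $((BASE_PORT + $1))
}

# Print one nginx "server" line per GPU index passed as an argument
upstream_servers() {
  local gpu
  for gpu in "$@"; do
    printf 'server 127.0.0.1:%s;\n' "$(port_for_gpu "$gpu")"
  done
}

# Example: the two backends used in the nginx upstream below (GPUs 2 and 3)
upstream_servers 2 3
# prints:
# server 127.0.0.1:11436;
# server 127.0.0.1:11437;
```

Keeping the mapping arithmetic in one place avoids a mismatch between the systemd units and the nginx upstream.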

- Server name: AIGC-01

Server hardware:

- CPU: AMD Ryzen Threadripper PRO 3955WX 16-Cores

- GPU: 4 x NVIDIA RTX 4090

- MEM: 128 GB

- Model: DeepSeek-Coder-V2-Lite-Instruct:14b

Ollama configuration

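```bash
# Stop and disable the stock single-instance service first: it also listens on 11434,
# which would clash with ollama-gpu0. Then back up its unit file.
sudo systemctl disable --now ollama.service
cd /etc/systemd/system/
sudo mv ollama.service ollama.service.bak

# Create 4 independent unit files, one per GPU, each on its own port (GPU i -> 11434+i).
# EOF is unquoted on purpose: $i and $((11434+i)) are expanded when each file is written.
for i in {0..3}; do
sudo tee /etc/systemd/system/ollama-gpu${i}.service > /dev/null <<EOF
[Unit]
Description=Ollama Service (GPU $i)
After=network-online.target

[Service]
# Key settings: pin this instance to a single GPU and give it its own port
Environment="CUDA_VISIBLE_DEVICES=$i"
Environment="OLLAMA_HOST=0.0.0.0:$((11434+i))"
ExecStart=/usr/local/bin/ollama serve
Restart=always
RestartSec=3
User=ollama
Group=ollama

[Install]
WantedBy=multi-user.target
EOF
done

# Reload systemd so it picks up the new units
sudo systemctl daemon-reload

# Start all GPU instances
sudo systemctl start ollama-gpu{0..3}.service

# Enable them at boot
sudo systemctl enable ollama-gpu{0..3}.service
```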
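After the units are started, it is worth checking that every instance actually answers on its port. The minimal sketch below assumes four GPUs on ports 11434 to 11437 (as in the unit files above) and that curl is installed. It relies on three documented Ollama behaviors:

- `GET /` returns `Ollama is running`.
- A `POST /api/generate` with no prompt only loads the model.
- `keep_alive: -1` keeps the model resident.

The function names are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: probe each per-GPU Ollama instance and optionally preload a model on it.
# Assumptions: 4 GPUs on ports 11434..11437 (as in the unit files above), curl installed.

BASE_PORT=11434
GPU_COUNT=4

# Succeeds if the instance on port $1 answers "Ollama is running" on GET /
probe_instance() {
  local body
  body=$(curl -s --max-time 3 "http://127.0.0.1:$1/") || return 1
  [[ "$body" == "Ollama is running" ]]
}

# JSON body asking Ollama to load a model without generating text;
# keep_alive -1 keeps it in memory instead of unloading it after the idle timeout
preload_payload() {
  printf '{"model": "%s", "keep_alive": -1}' "$1"
}

# Probe every instance; if a model tag is given, preload it on each healthy one.
# Returns non-zero if any instance is down.
check_instances() {
  local model="${1:-}" i port failed=0
  for ((i = 0; i < GPU_COUNT; i++)); do
    port=$((BASE_PORT + i))
    if probe_instance "$port"; then
      echo "GPU $i (port $port): UP"
      if [[ -n "$model" ]]; then
        curl -s --max-time 600 "http://127.0.0.1:$port/api/generate" \
          -d "$(preload_payload "$model")" > /dev/null || echo "  preload of $model failed"
      fi
    else
      echo "GPU $i (port $port): DOWN"
      failed=1
    fi
  done
  return "$failed"
}
```

Usage, with a hypothetical file name: `source ollama-check.sh && check_instances <model-tag>`, passing the tag you pulled. All four instances run as the same `ollama` user with the default model directory, so pulling the model once through any port should make it visible to all of them. After preloading, `nvidia-smi` should show memory in use on each card.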

nginx configuration

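nginx has to be compiled with an extra third-party module, nginx_upstream_check_module, to actively health-check the upstream instances. Stock open-source nginx only offers passive checks (`max_fails` / `fail_timeout`). The build used here: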

```text
root@sunmax-AIGC-01:/etc/systemd/system# nginx -V
nginx version: nginx/1.24.0
built by gcc 9.4.0 (Ubuntu 9.4.0-1ubuntu1~20.04.2)
built with OpenSSL 1.1.1f 31 Mar 2020
TLS SNI support enabled
configure arguments: --with-http_ssl_module --add-module=./nginx_upstream_check_module
```
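Before relying on the module's health checks, a quick guard can confirm that the nginx binary actually in use was built with it. The minimal sketch below inspects the `configure arguments` line. The function name is hypothetical. Note that `nginx -V` writes to stderr, so it must be redirected into the pipe.

```bash
#!/usr/bin/env bash
# Sketch: check that `nginx -V` output lists nginx_upstream_check_module.
# Usage: nginx -V 2>&1 | has_check_module

# Succeeds if the configure arguments read from stdin include the module
has_check_module() {
  grep -q -- '--add-module=[^ ]*nginx_upstream_check_module'
}
```

Combined with the built-in config test, a safe pre-reload check is `nginx -V 2>&1 | has_check_module && nginx -t`.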

```nginx
# /etc/nginx/sites-available/mga.maxiot-inc.com.conf

# log_format is only valid in the http context: keep it outside any server block
log_format detailed '$remote_addr - $remote_user [$time_local] '
                    '"$request" $status $body_bytes_sent '
                    '"$http_referer" "$http_user_agent" '
                    'RT=$request_time URT=$upstream_response_time '
                    'Host=$host Proto=$server_protocol '
                    'Header={\"X-Forwarded-For\": \"$proxy_add_x_forwarded_for\", '
                    '\"X-Real-IP\": \"$remote_addr\", '
                    '\"User-Agent\": \"$http_user_agent\", '
                    '\"Content-Type\": \"$content_type\"} '
                    'SSL=$ssl_protocol/$ssl_cipher '
                    'Upstream=$upstream_addr '
                    'Request_Length=$request_length '
                    'Request_Method=$request_method '
                    'Server_Name=$server_name '
                    'Server_Port=$server_port ';

upstream ollama_backend {
    server 127.0.0.1:11436;
    server 127.0.0.1:11437;

    # Active health check (requires nginx_upstream_check_module): probe GET / every 3 s,
    # mark a backend down after 5 failures and up again after 2 successes
    check interval=3000 rise=2 fall=5 timeout=1000 type=http;
    check_http_send "GET / HTTP/1.0\r\n\r\n";
    check_http_expect_alive http_2xx;
}

server {
    listen 443 ssl;
    server_name mga.maxiot-inc.com;

    ssl_certificate     /etc/nginx/ssl/maxiot-inc.com.pem;
    ssl_certificate_key /etc/nginx/ssl/maxiot-inc.com.key;

    # Access log
    access_log /var/log/nginx/mga_maxiot_inc_com_access.log detailed;

    # Error log
    error_log /var/log/nginx/mga_maxiot_inc_com_error.log;

    # Load balancing: forward to ollama_backend
    location / {
        proxy_pass http://ollama_backend;  # round-robins between the two backends
        proxy_set_header Host $host;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        proxy_set_header X-Forwarded-Proto $scheme;

        # Ollama streams tokens: pass them through unbuffered and allow for slow
        # model loads and long generations (600s is an illustrative value)
        proxy_http_version 1.1;
        proxy_buffering off;
        proxy_read_timeout 600s;
    }
}
```
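The `detailed` format records `Upstream=` and `URT=` for every request, so the access log doubles as a basic monitoring source. It shows whether traffic is really spread across the backends and how long each one takes. The minimal bash/awk sketch below assumes those two fields stay whitespace-separated as in the log_format above. The function name `upstream_summary` is hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: per-backend summary of an access log written with the "detailed" format.
# Prints "<upstream> <requests> <mean URT in seconds>", one line per backend.
# When nginx retried a request, $upstream_addr and $upstream_response_time are
# comma-separated lists; this sketch keeps only the first entry of each.
# A URT of "-" counts as a request but not toward the mean.

upstream_summary() {
  awk '
    {
      up = ""; urt = ""
      for (i = 1; i <= NF; i++) {
        if ($i ~ /^Upstream=/) up = substr($i, 10)
        else if ($i ~ /^URT=/) urt = substr($i, 5)
      }
      if (up == "" || up == "-") next   # request never reached a backend
      sub(/,.*/, "", up)
      sub(/,.*/, "", urt)
      n[up]++
      if (urt != "" && urt != "-") { sum[up] += urt; timed[up]++ }
    }
    END {
      for (u in n)
        printf "%s %d %.3f\n", u, n[u], (timed[u] ? sum[u] / timed[u] : 0)
    }
  ' "$@" | sort
}
```

Usage: `upstream_summary /var/log/nginx/mga_maxiot_inc_com_access.log`. Roughly equal request counts suggest round-robin is working. A backend that is missing, or whose mean URT is far higher than the others', is the first place to look when an instance misbehaves.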