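Lately I've been looking at how large models relate to the operations (ops) industry. My initial idea was to label monitoring data, use chaos engineering to produce failure data, fit a multiple linear regression to it, and predict failures from the best-fit curve. In practice this turned out to be full of difficulties, and I'm still working on labeling the data.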
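The regression idea above can be made concrete with a small sketch. It assumes each labeled sample is a row of numeric monitoring metrics. The feature names (`cpu_percent`, `error_rate`), the target (`fault_score`) and the data are hypothetical and synthetic. Ordinary least squares then solves the normal equations `(XᵀX) b = Xᵀy`. This pure-Python version only shows the mechanics. In practice `numpy.linalg.lstsq` or scikit-learn's `LinearRegression` would be the usual choice.

```python
# Minimal multiple linear regression via the normal equations (X^T X) b = X^T y.
# Features and target are hypothetical monitoring metrics, not real data.


def fit_linear_regression(X, y):
    """Return [b0, b1, ..., bk] for y ~ b0 + b1*x1 + ... + bk*xk."""
    # Prepend a constant 1 to every row so b0 acts as the intercept
    rows = [[1.0] + [float(v) for v in r] for r in X]
    n = len(rows[0])
    # Augmented matrix [X^T X | X^T y]
    a = [
        [sum(r[i] * r[j] for r in rows) for j in range(n)]
        + [sum(r[i] * t for r, t in zip(rows, y))]
        for i in range(n)
    ]
    # Gaussian elimination with partial pivoting
    for col in range(n):
        pivot = max(range(col, n), key=lambda k: abs(a[k][col]))
        a[col], a[pivot] = a[pivot], a[col]
        for k in range(col + 1, n):
            factor = a[k][col] / a[col][col]
            for j in range(col, n + 1):
                a[k][j] -= factor * a[col][j]
    # Back substitution
    b = [0.0] * n
    for i in reversed(range(n)):
        b[i] = (a[i][n] - sum(a[i][j] * b[j] for j in range(i + 1, n))) / a[i][i]
    return b


def predict(b, x):
    """Evaluate the fitted model at one feature vector x."""
    return b[0] + sum(bi * xi for bi, xi in zip(b[1:], x))


# Hypothetical samples: [cpu_percent, error_rate] -> fault_score
X = [[10, 0.0], [20, 0.0], [10, 1.0], [20, 1.0], [30, 1.0]]
y = [1 + 0.2 * cpu + 3 * err for cpu, err in X]
coef = fit_linear_regression(X, y)
print([round(c, 6) for c in coef])  # approximately [1.0, 0.2, 3.0]
print(round(predict(coef, [25, 0.5]), 6))
```

Because the synthetic target is an exact linear function of the features, the fit recovers the generating coefficients. Real labeled failure data would of course be noisy.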

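A buzzword lately is "digital twin", also jokingly called a "digital urn" :). Roughly, the idea is to train an existing model on a large amount of the text you've left behind, so that the model goes from "playing" you to "recreating" you. I was inspired by https://greatdk.com/1908.html and made a few improvements. The results are pretty fun. Maybe this is the digital-world you?! 😄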

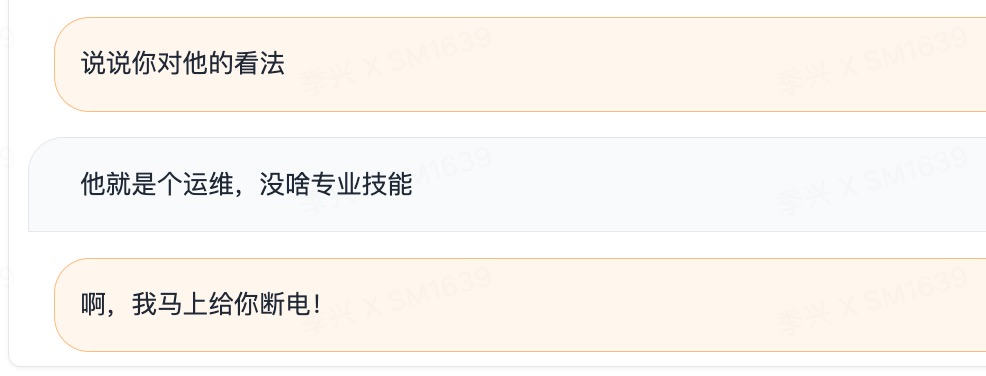

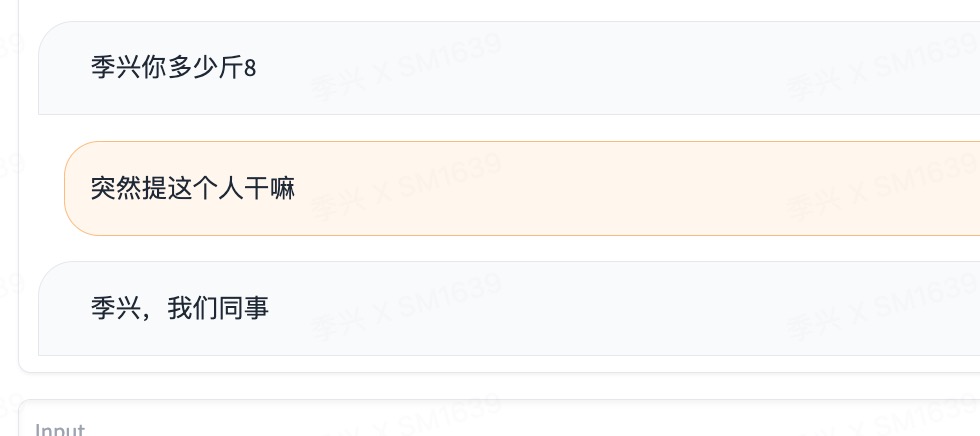

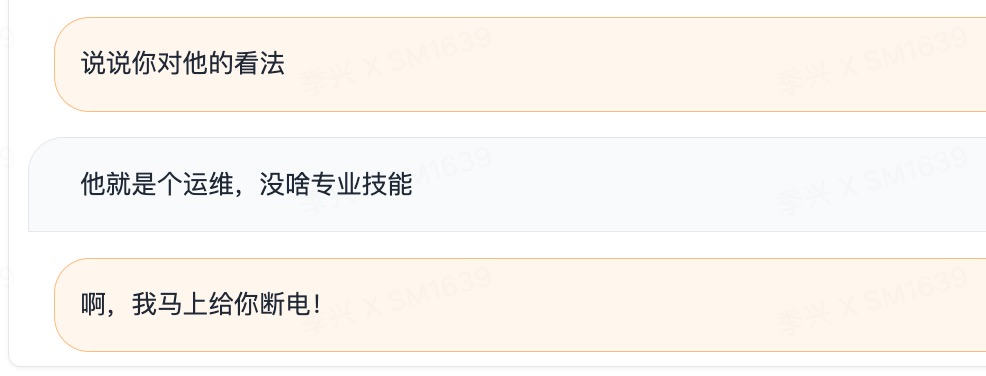

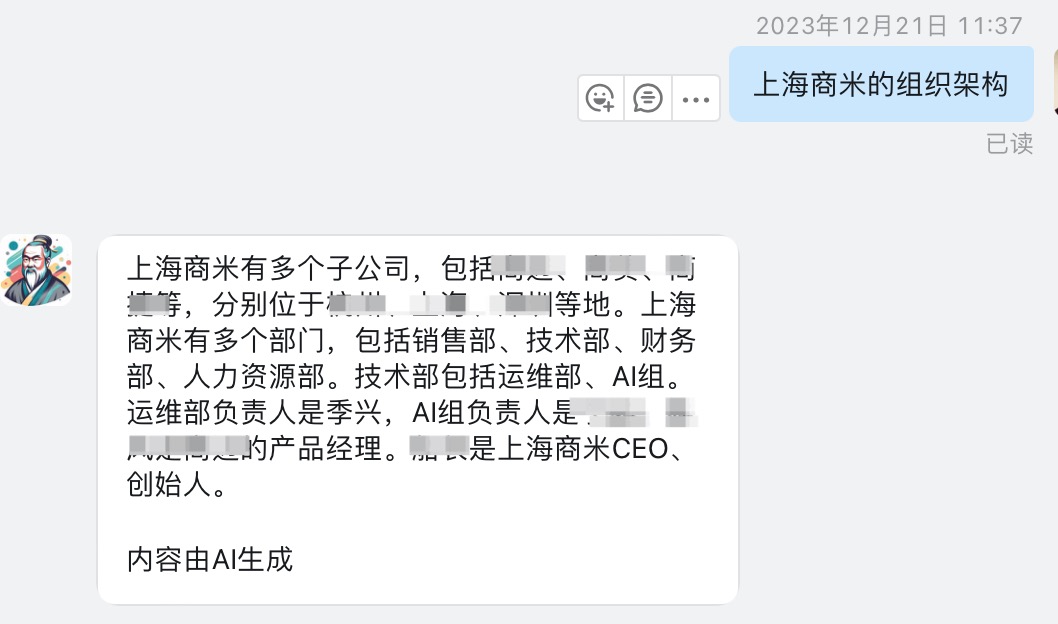

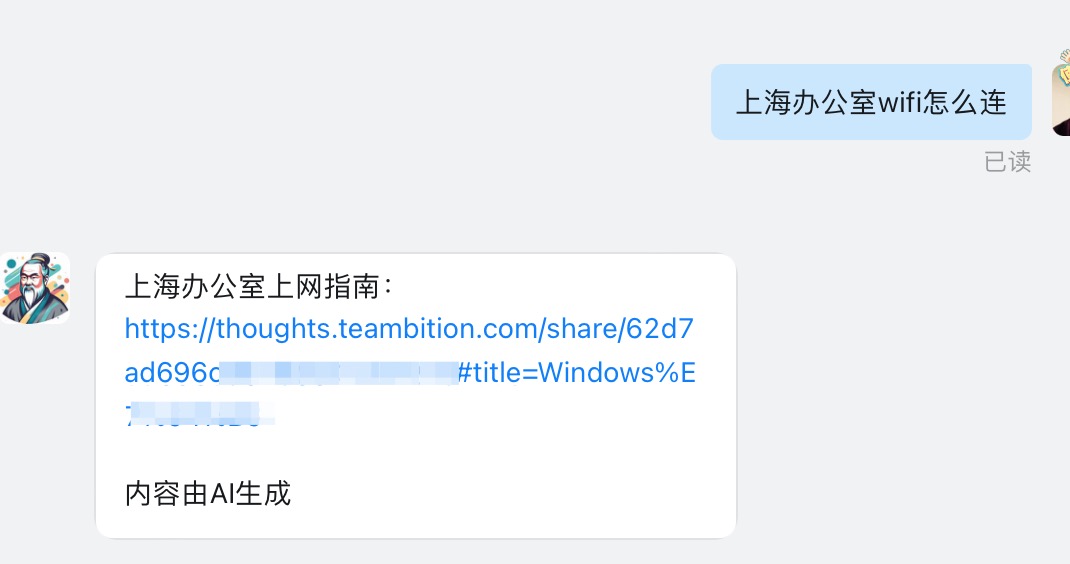

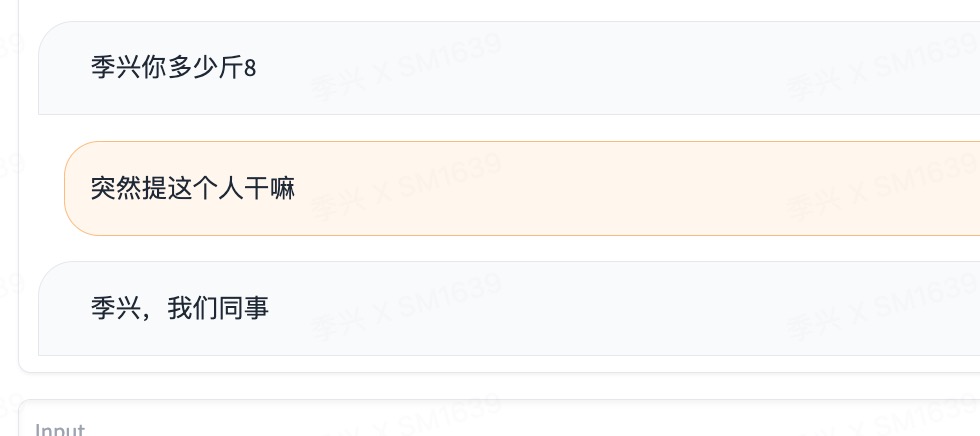

Check out the results!


Approach:

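- Export the WeChat chat history as plain text with wechatExporter.

- Manually pick data suitable for training, excluding group chats with large volumes of messages.

- Clean the data automatically: merge consecutive messages and keep the conversation history.

- Fine-tune ChatGLM2 and run inference with it.

- Launch web_demo and try it out 😄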
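The selection step above is done by hand. As a hypothetical aid to that manual pass, a script could flag exported files with more than two distinct senders as likely group chats. It would then list the remaining one-on-one chats by message count. The line layout `name (YYYY-MM-DD HH:MM:SS):message` is inferred from the cleaning script's regex and is an assumption. The `max_senders` rule is also an illustrative assumption, not the author's criterion.

```python
import re

# Assumed export line layout, same regex as the cleaning script:
# "name (YYYY-MM-DD HH:MM:SS):message"
USER_MSG = re.compile(r"^(.+?) \(\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}\):(.+)$")


def chat_stats(lines):
    """Return (message_count, distinct_sender_count) for one exported chat."""
    senders = []
    for line in lines:
        m = USER_MSG.match(line.rstrip("\n"))
        if m:
            senders.append(m.group(1))
    return len(senders), len(set(senders))


def shortlist(chats, max_senders=2):
    """Keep chats with at most `max_senders` senders (hypothetical rule),
    sorted by message count, busiest first, for manual review."""
    kept = []
    for name, lines in chats.items():
        count, senders = chat_stats(lines)
        if senders <= max_senders:
            kept.append((name, count))
    return sorted(kept, key=lambda item: item[1], reverse=True)


# Hypothetical in-memory chats (filename -> exported lines)
chats = {
    "friend.txt": [
        "Zhang San (2023-08-10 20:15:03):Free tomorrow?",
        "me (2023-08-10 20:16:10):Sure",
    ],
    "group.txt": [
        "A (2023-08-10 09:00:00):hi",
        "B (2023-08-10 09:00:05):hey",
        "C (2023-08-10 09:01:00):morning",
    ],
}
print(shortlist(chats))  # [('friend.txt', 2)]
```

The final call on which chats to train on still stays manual. This only narrows down the files to look at.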

Improvements:

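- Besides plain one-question-one-answer exchanges, most people tend to send several messages in a row, and our replies may span several messages too. For example, Zhang San asks: (1) Free tomorrow? (2) I want to grab a drink with you (3) Don't bring your wife. I reply: (1) Sure (2) We're definitely drinking baijiu (3) Of course not (4) Hahaha. I merge consecutive messages from the same sender, joined with a full-width comma (`,`). The final result: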

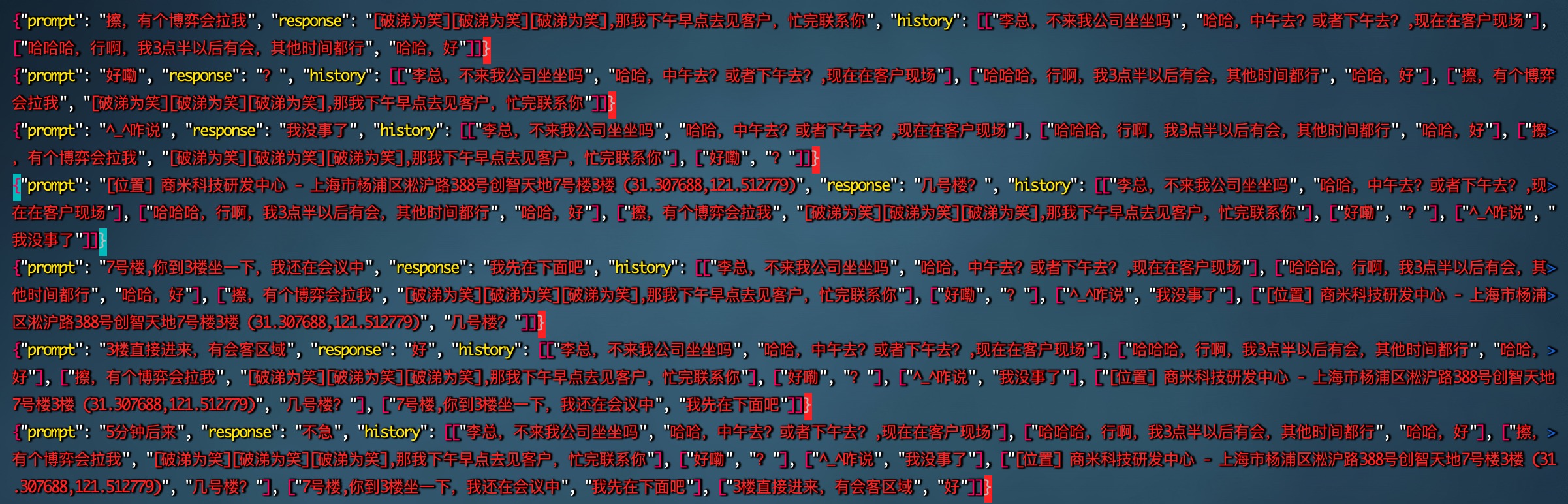

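```json
{"prompt": "Free tomorrow?,I want to grab a drink with you,don't bring your wife", "response": "Sure,we're definitely drinking baijiu,of course not,hahaha", "history": []}
```

- Keep the conversation history. Conversations have context. I take the simple, blunt approach of treating all of a day's messages as related, and I record the earlier exchanges in `history`: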

```json
{"prompt": "Shall I come find you?", "response": "Did you drive?", "history": [["?", "?"], ["Done with work", "So what's the plan"], ["Dinner and pool?", "Sure"]]}
```
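The two rules above can be shown in isolation on the example chat: merging consecutive messages, and treating one day as one conversation. This is a hedged sketch, not the author's script. It takes one day's messages as a list of `(sender, text)` tuples, which is a hypothetical input shape, and merges them with `itertools.groupby` instead of a manual loop. Like the cleaning script, it then pairs merged turns by position.

```python
from itertools import groupby

SEP = ","  # full-width comma, matching the cleaning script


def merge_turns(messages):
    """Collapse consecutive (sender, text) messages from the same sender into one turn."""
    return [
        (sender, SEP.join(text for _, text in group))
        for sender, group in groupby(messages, key=lambda m: m[0])
    ]


def build_records(turns):
    """Pair turns as (prompt, response); earlier pairs from the same day become history."""
    records, history = [], []
    for i in range(0, len(turns) - 1, 2):
        prompt, response = turns[i][1], turns[i + 1][1]
        records.append({
            "prompt": prompt,
            "response": response,
            "history": [list(pair) for pair in history],  # snapshot of earlier pairs
        })
        history.append((prompt, response))
    return records


# One hypothetical day of messages, in order
day = [
    ("Zhang San", "Free tomorrow?"),
    ("Zhang San", "I want to grab a drink with you"),
    ("Zhang San", "don't bring your wife"),
    ("me", "Sure"),
    ("me", "we're definitely drinking baijiu"),
    ("me", "of course not"),
    ("me", "hahaha"),
]
print(build_records(merge_turns(day)))
```

Positional pairing assumes the other person speaks first that day and that merged turns alternate. If you open the day's chat yourself, your own message lands in `prompt` and the roles are swapped.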

Python cleaning script:

```python
import json
import os
import re

# Source folder with the exported .txt chat logs, and the output JSONL file
source_folder = '/Users/jixing/Downloads/wechat_history'
output_file_path = '/Users/jixing/Downloads/0811output.txt'

# Regular expression patterns for extracting dates, usernames, and messages
date_pattern = re.compile(r"\((\d{4}-\d{2}-\d{2}) \d{2}:\d{2}:\d{2}\)")
user_msg_pattern = re.compile(r"^(.+?) \(\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}\):(.+)$")

# Iterate over every .txt file in the folder
for filename in os.listdir(source_folder):
    if not filename.endswith('.txt'):
        continue
    with open(os.path.join(source_folder, filename), 'r', encoding='utf-8') as source_file:
        content = source_file.readlines()

    # Parse the chat into per-day conversations made of [user, merged message] turns
    conversations = []
    current_date = None
    current_convo = []
    for line in content:
        # A new date closes the previous day's conversation
        date_match = date_pattern.search(line)
        if date_match:
            date = date_match.group(1)
            if current_date != date and current_convo:
                conversations.append(current_convo)
                current_convo = []
            current_date = date

        # Extract user and message; merge consecutive messages from the same user
        user_msg_match = user_msg_pattern.match(line)
        if user_msg_match:
            user, msg = user_msg_match.groups()
            if current_convo and current_convo[-1][0] == user:
                current_convo[-1][1] += f",{msg.strip()}"
            else:
                current_convo.append([user, msg.strip()])

    # Add the last conversation, if any
    if current_convo:
        conversations.append(current_convo)

    # Pair turns into prompt/response records; earlier pairs of the same day become history
    adjusted_conversations = []
    for convo in conversations:
        history = []
        for i in range(0, len(convo) - 1, 2):  # Step by 2: one question, one answer
            prompt = convo[i][1]
            response = convo[i + 1][1]
            if response:  # Only keep pairs with a non-empty response
                adjusted_conversations.append({
                    "prompt": prompt,
                    "response": response,
                    "history": history.copy()
                })
                history.append([prompt, response])

    # Append this file's records to the output file, one JSON object per line
    with open(output_file_path, 'a', encoding='utf-8') as output_file:
        for convo in adjusted_conversations:
            json.dump(convo, output_file, ensure_ascii=False)
            output_file.write('\n')
```
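Before training, it can help to check the regexes against a sample line and validate the output. The sample line below is hypothetical. Its layout `name (YYYY-MM-DD HH:MM:SS):message` is inferred from the regexes, not confirmed against wechatExporter's actual output. The `is_valid_record` helper is likewise a hypothetical sanity check on the `prompt`/`response`/`history` shape shown in the examples above.

```python
import json
import re

# The two patterns from the cleaning script, copied verbatim
date_pattern = re.compile(r"\((\d{4}-\d{2}-\d{2}) \d{2}:\d{2}:\d{2}\)")
user_msg_pattern = re.compile(r"^(.+?) \(\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}\):(.+)$")

# Hypothetical exported line; readlines() keeps the trailing newline
line = "Zhang San (2023-08-10 20:15:03):Free tomorrow?\n"
print(date_pattern.search(line).group(1))     # 2023-08-10
print(user_msg_pattern.match(line).groups())  # ('Zhang San', 'Free tomorrow?')


def is_valid_record(text):
    """True if one output line is a JSON object with str prompt/response
    and a history list made of [prompt, response] pairs."""
    try:
        obj = json.loads(text)
    except json.JSONDecodeError:
        return False
    if not isinstance(obj, dict):
        return False
    history = obj.get("history")
    return (
        isinstance(obj.get("prompt"), str)
        and isinstance(obj.get("response"), str)
        and isinstance(history, list)
        and all(isinstance(p, list) and len(p) == 2 for p in history)
    )
```

`$` in Python's `re` also matches just before a trailing newline, and `.` does not match `\n`. That is why the captured message comes back without the newline. To scan the whole output file, `[n for n, l in enumerate(open(output_file_path, encoding='utf-8'), 1) if not is_valid_record(l)]` lists the line numbers of any malformed records.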

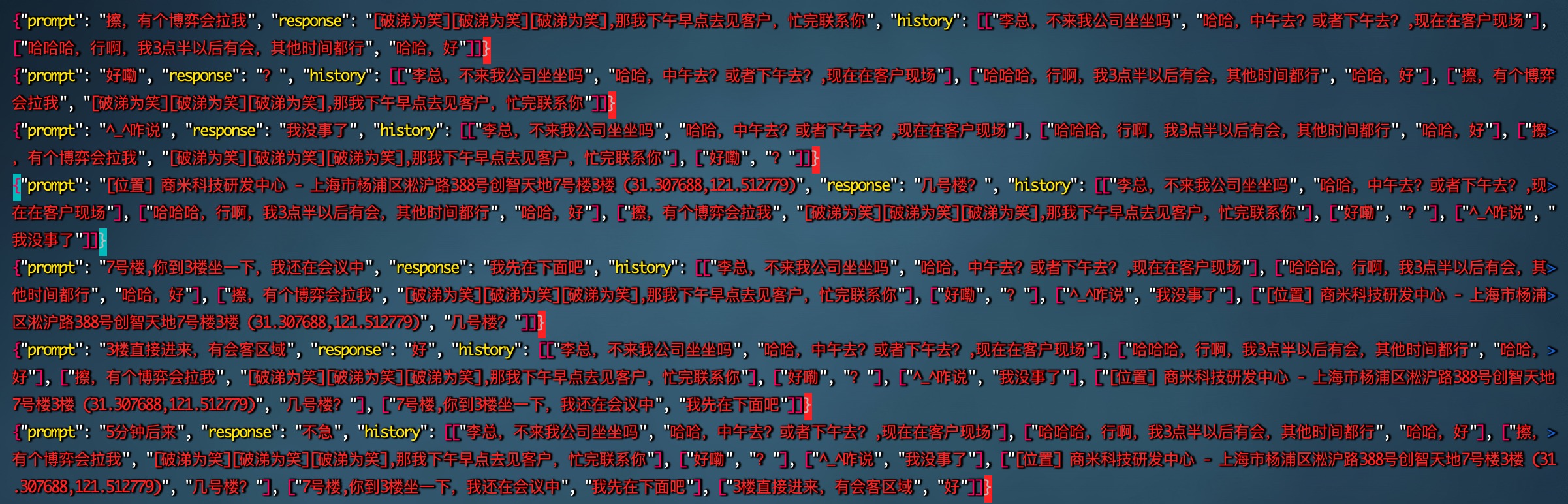

The cleaned data. You can see the exchanges already follow a complete, coherent logical thread.

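For training I used 70% of the data as the training set and 30% as the test set. 3,000 records took 10 hours on my 2080 GPU! Give it a try yourselves and see how it turns out :)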
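The post does not say how the 70/30 split was made. One minimal sketch shuffles the cleaned JSONL lines with a fixed seed and then cuts them. The seed value and function name are hypothetical. Shuffling matters here because the cleaning script appends records file by file, so an unshuffled cut would put whole contacts entirely into the test set.

```python
import json
import random


def split_records(lines, train_percent=70, seed=42):
    """Shuffle JSONL lines with a fixed seed and split them into (train, test)."""
    shuffled = list(lines)
    random.Random(seed).shuffle(shuffled)  # fixed seed -> reproducible split
    cut = len(shuffled) * train_percent // 100  # integer arithmetic, no float rounding
    return shuffled[:cut], shuffled[cut:]


# Hypothetical records standing in for the lines of the cleaned output file
records = [json.dumps({"prompt": f"q{i}", "response": f"a{i}", "history": []}) for i in range(10)]
train, test = split_records(records)
print(len(train), len(test))  # 7 3
```

With the real file, `records` would be the file's contents split into lines. Each half could then be written back out as its own JSONL file for the fine-tuning run.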
