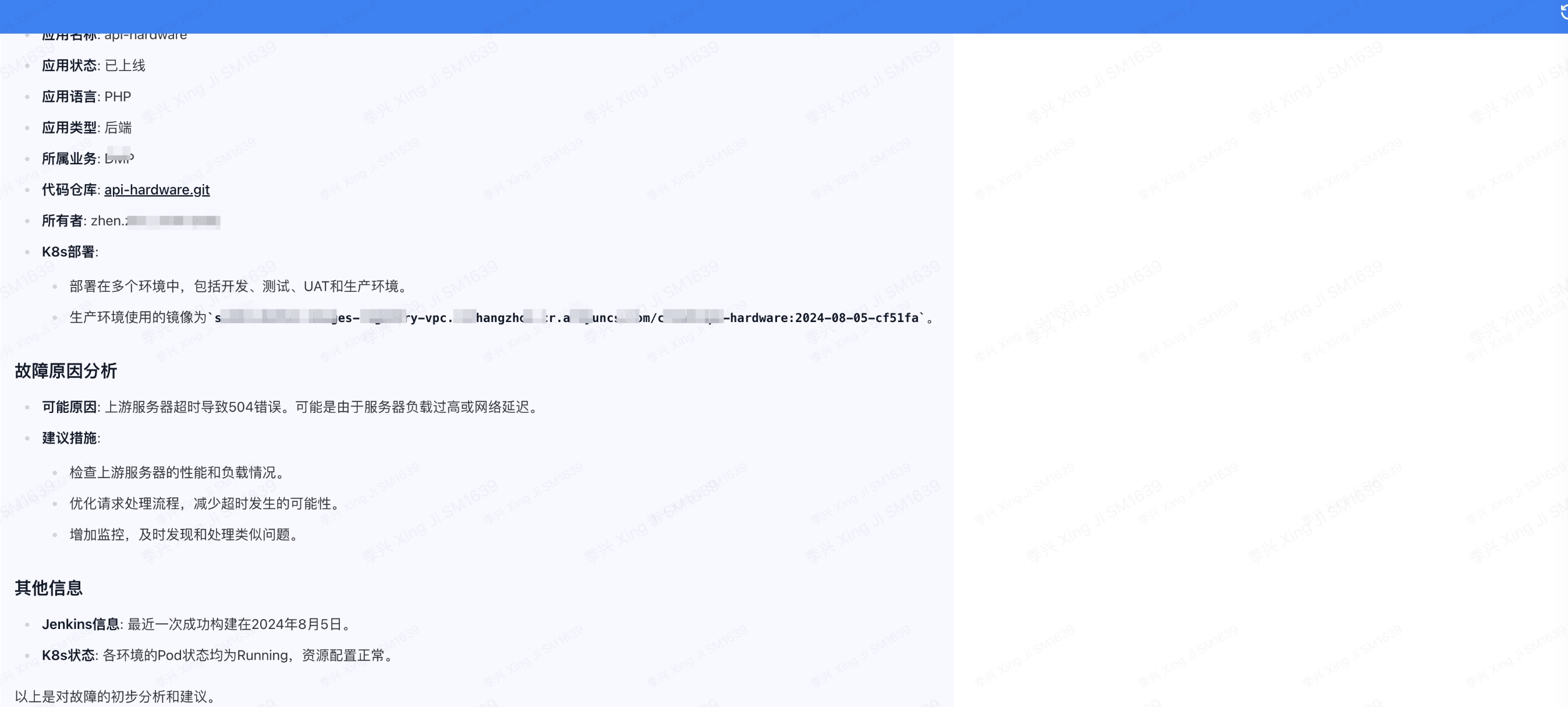

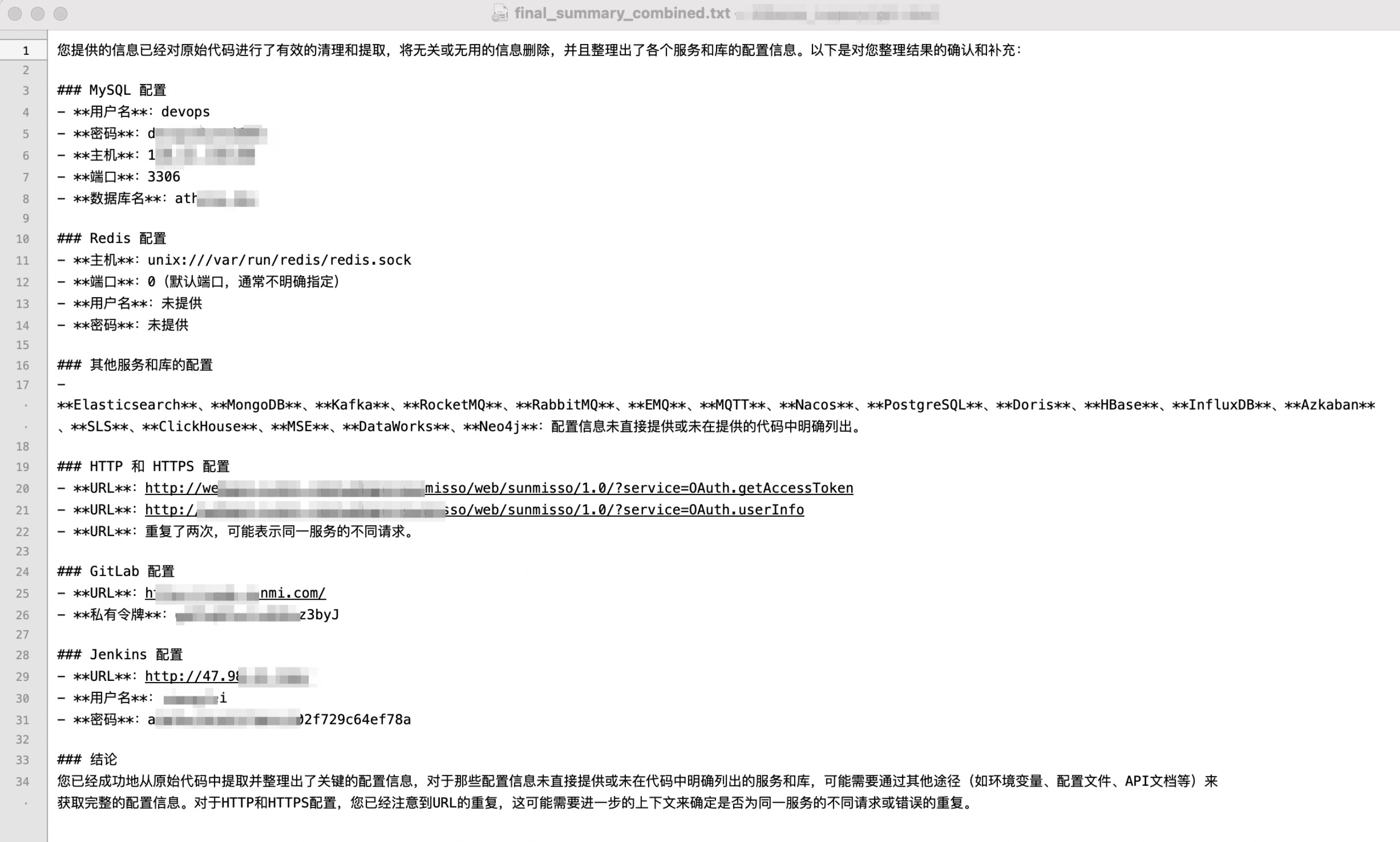

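I have recently been cleaning up our CMDB data. Using Jenkins as the hub, I am collecting and organizing where each application's code repository lives and where it is deployed. The result is an application-centric view that links each application to its resources and owners.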

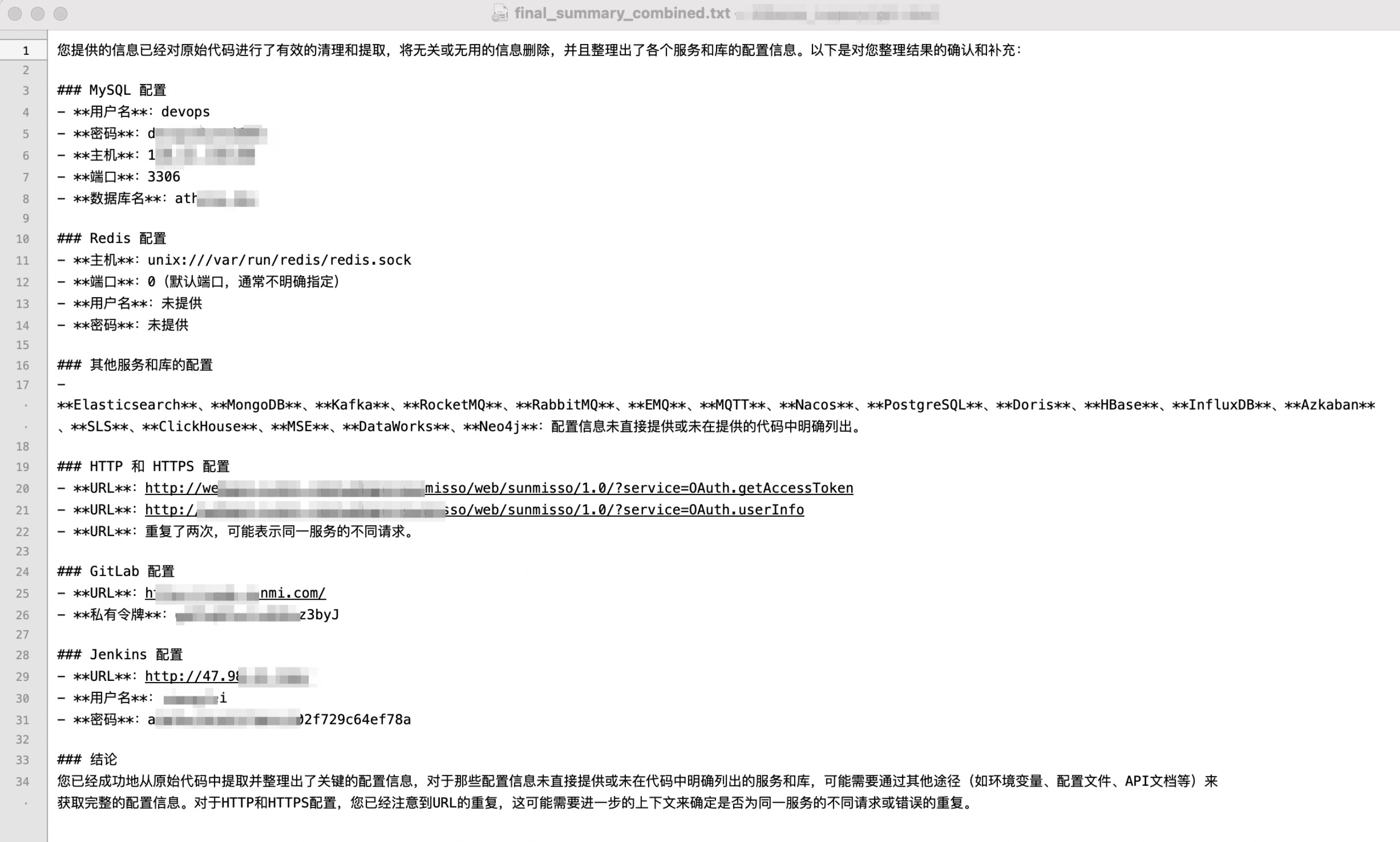

The first step is to collect the configuration information referenced in the code, such as MySQL, Redis, Elasticsearch, MongoDB, Kafka, RocketMQ, MQTT, Doris, HBase, InfluxDB, and HTTP endpoints.

Why this matters:

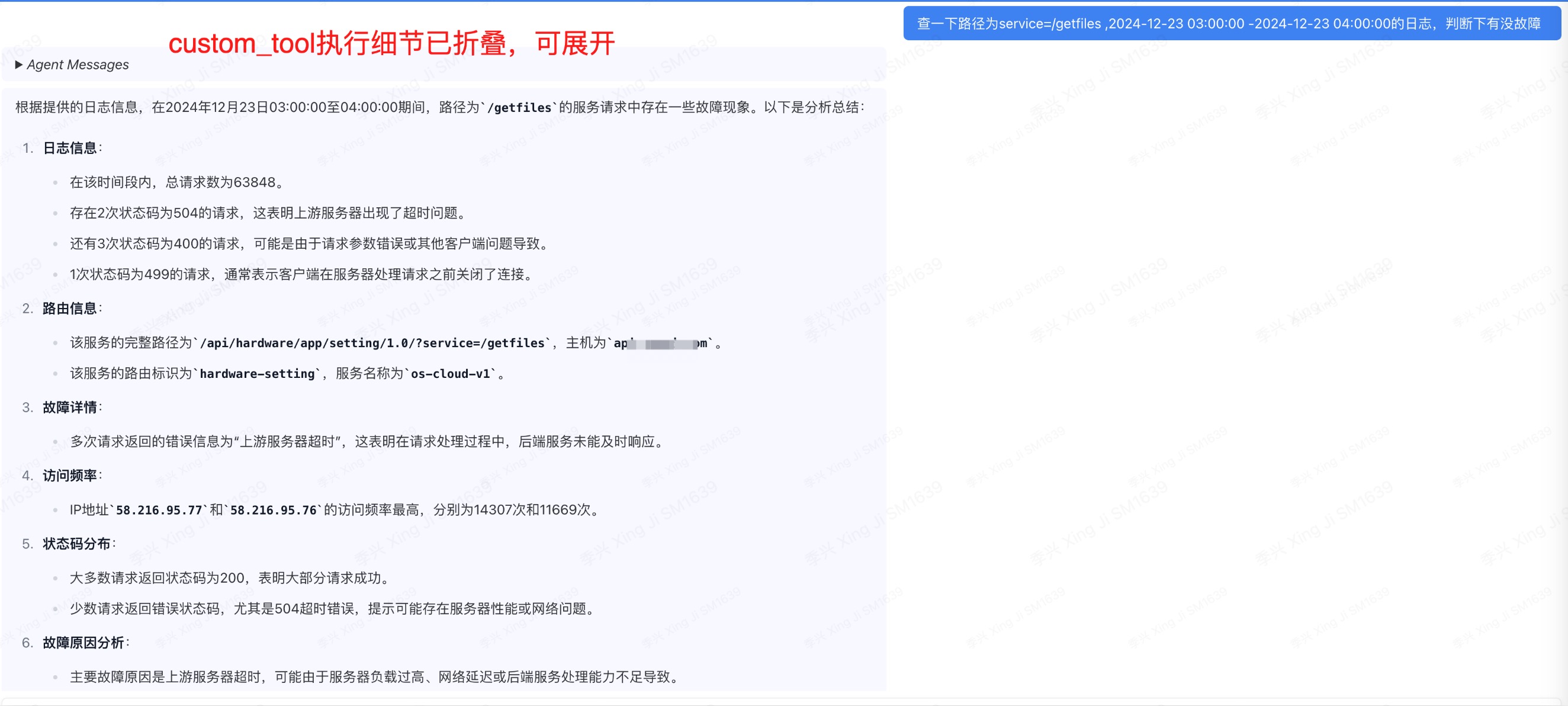

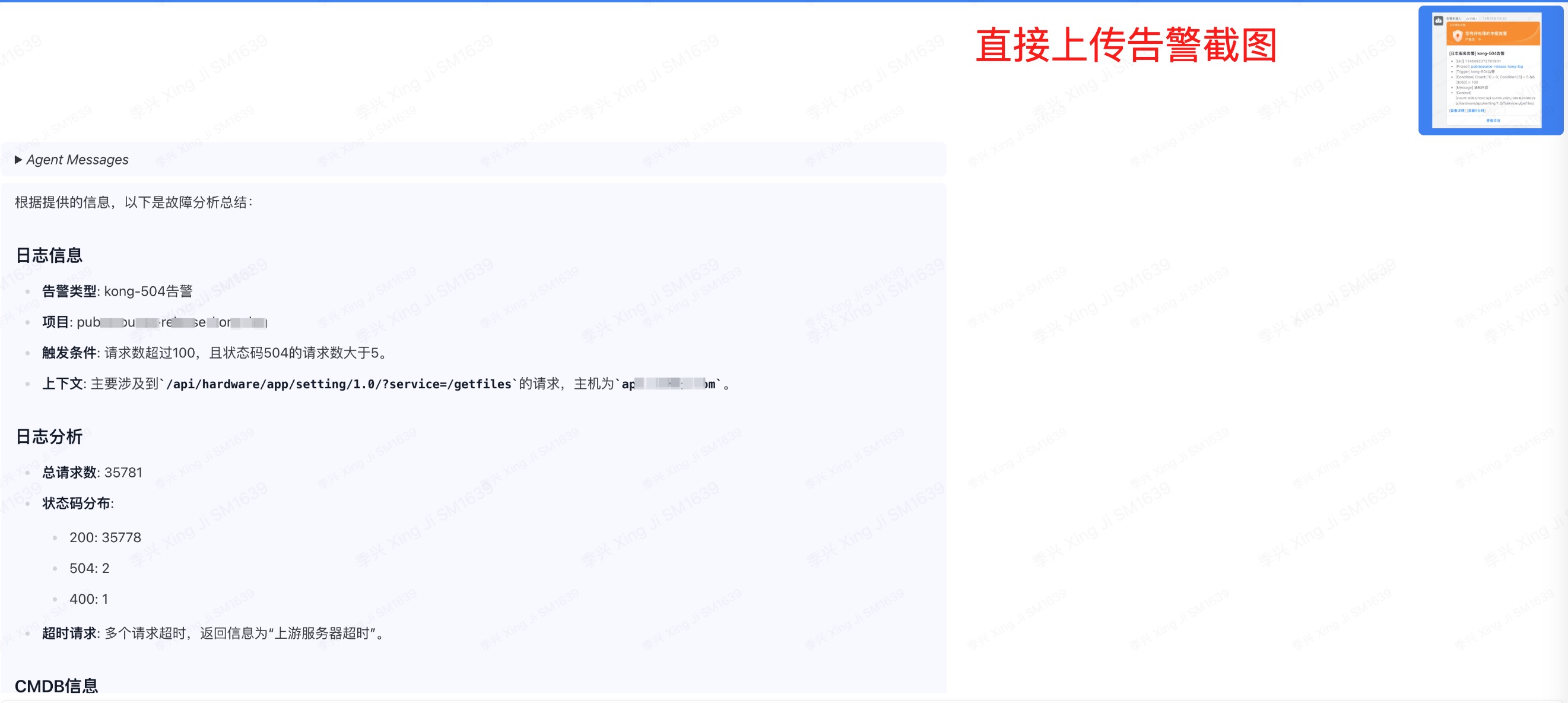

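- Troubleshooting reference: when a service returns a 504 error, for example, you can immediately pinpoint the specific middleware or external API involved.

- Violations of the configuration standard become visible, such as values hard-coded in source code instead of being kept in the configuration center.

- Resource consolidation: a resource that appears in no codebase is a candidate for decommissioning.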
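The third point is essentially a set difference: anything in the resource inventory that no codebase references is a candidate for decommissioning. Here is a minimal sketch, assuming both the inventory and the extracted references are available as plain `host:port` strings. The function name and the sample addresses are hypothetical.

```python
def decommission_candidates(inventory, referenced):
    """Return inventory endpoints that no scanned codebase references."""

    def normalize(endpoint):
        # Compare case-insensitively and ignore surrounding whitespace.
        return endpoint.strip().lower()

    seen = {normalize(e) for e in referenced}
    return sorted(e for e in {normalize(i) for i in inventory} if e not in seen)


# Hypothetical sample data, for illustration only.
inventory = ["mysql-a.internal:3306", "redis-b.internal:6379", "kafka-c.internal:9092"]
referenced = ["MySQL-A.internal:3306", "kafka-c.internal:9092 "]
candidates = decommission_candidates(inventory, referenced)
print(candidates)
```

In practice a candidate should still be checked against live traffic before it is removed, since the scan only covers the repositories that were analyzed.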

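Compared with traditional regular-expression matching, an LLM-assisted approach has the following advantages:

- An LLM recognizes more configuration than regular expressions, which depend on hand-written rules. This helps when there are many languages, complex conventions, and loosely enforced standards.

- An LLM can format and filter its output with a simple prompt.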
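To make the second point concrete: if the prompt asks the model to answer with a JSON array, the reply can be loaded straight into Python. This is a minimal sketch, assuming a hypothetical prompt that requests JSON objects with `type`, `host`, and `port` keys (the script in this post asks for free-form text instead). Small models sometimes wrap JSON in extra prose, so the parser tolerates that.

```python
import json


def parse_config_records(reply):
    """Extract the outermost JSON array from a model reply; return [] on failure."""
    start = reply.find("[")
    end = reply.rfind("]")
    if start == -1 or end <= start:
        return []
    try:
        records = json.loads(reply[start:end + 1])
    except json.JSONDecodeError:
        return []
    # Keep only dictionary entries; anything else is treated as noise.
    return [r for r in records if isinstance(r, dict)]


# Hypothetical model reply, for illustration only.
reply = 'Here is the result:\n[{"type": "mysql", "host": "10.0.0.5", "port": 3306}]\nDone.'
records = parse_config_records(reply)
print(records)
```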

Environment

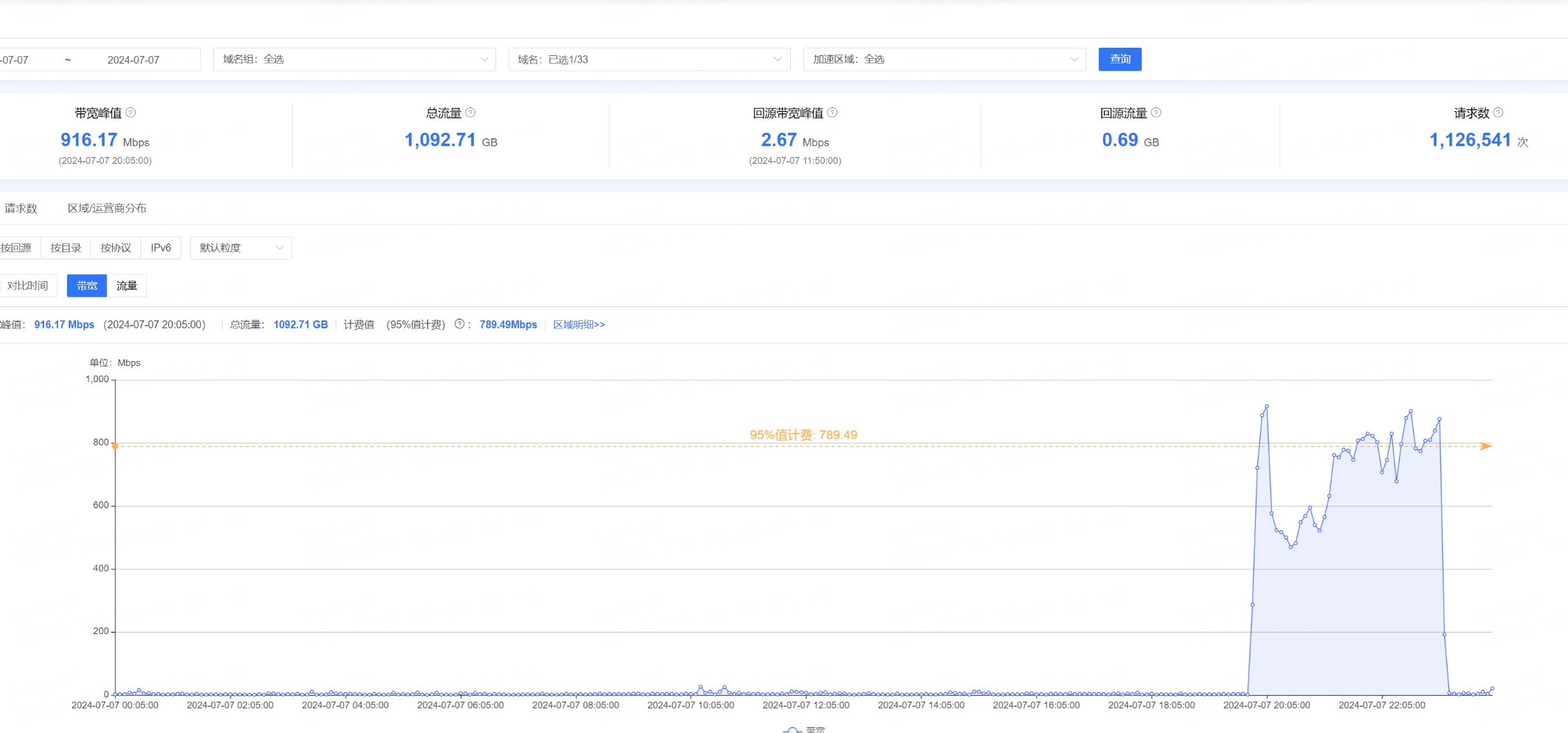

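- Demo: the analysis was run on the code of an internal CMDB project written in Python with the Django framework. The codebase is about 5 MB and its configuration is fairly scattered. The analysis took 30 seconds.

- Code and configuration assets are confidential, so to keep them secure I use local models that have scored well recently, Meta-Llama-3.1-8B-Instruct and Qwen2-7B-Instruct, deployed with vLLM.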

```bash
#!/bin/bash

# Set environment variables: expose GPUs 0 and 1 to the server
export CUDA_VISIBLE_DEVICES=0,1

# Start the vLLM server and move it to the background
nohup python3 -m vllm.entrypoints.openai.api_server \
    --model /data/vllm/Meta-Llama-3.1-8B-Instruct \
    --served-model-name llama \
    --tensor-parallel-size 2 \
    --trust-remote-code > llama.log 2>&1 &
```
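Once the server is up, its OpenAI-compatible API can be queried to confirm that the model is served under the expected name. This is a minimal sketch, assuming the server listens on vLLM's default port 8000 on localhost (the launch script does not set a port). The helper names are hypothetical.

```python
import json
import urllib.request


def served_model_ids(payload):
    """Return the model ids from a /v1/models response body (an OpenAI-style list)."""
    return [item["id"] for item in payload.get("data", []) if "id" in item]


def fetch_served_model_ids(base_url="http://localhost:8000", timeout=5):
    """Query a running server; raises urllib.error.URLError if it is unreachable."""
    with urllib.request.urlopen(f"{base_url}/v1/models", timeout=timeout) as resp:
        return served_model_ids(json.load(resp))


# Shape of the response body, trimmed to the fields used here.
sample = {"object": "list", "data": [{"id": "llama", "object": "model"}]}
print(served_model_ids(sample))
```

With the server running, `"llama" in fetch_served_model_ids()` should hold, because the launch script passes `--served-model-name llama`.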

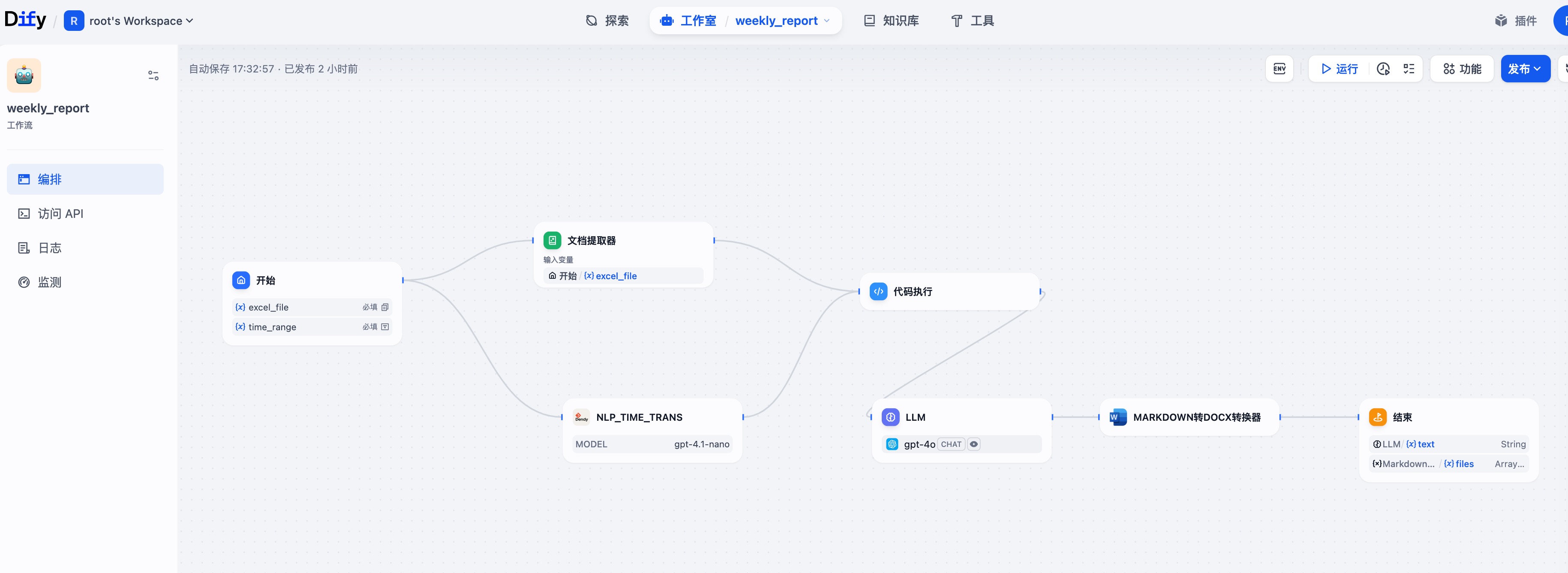

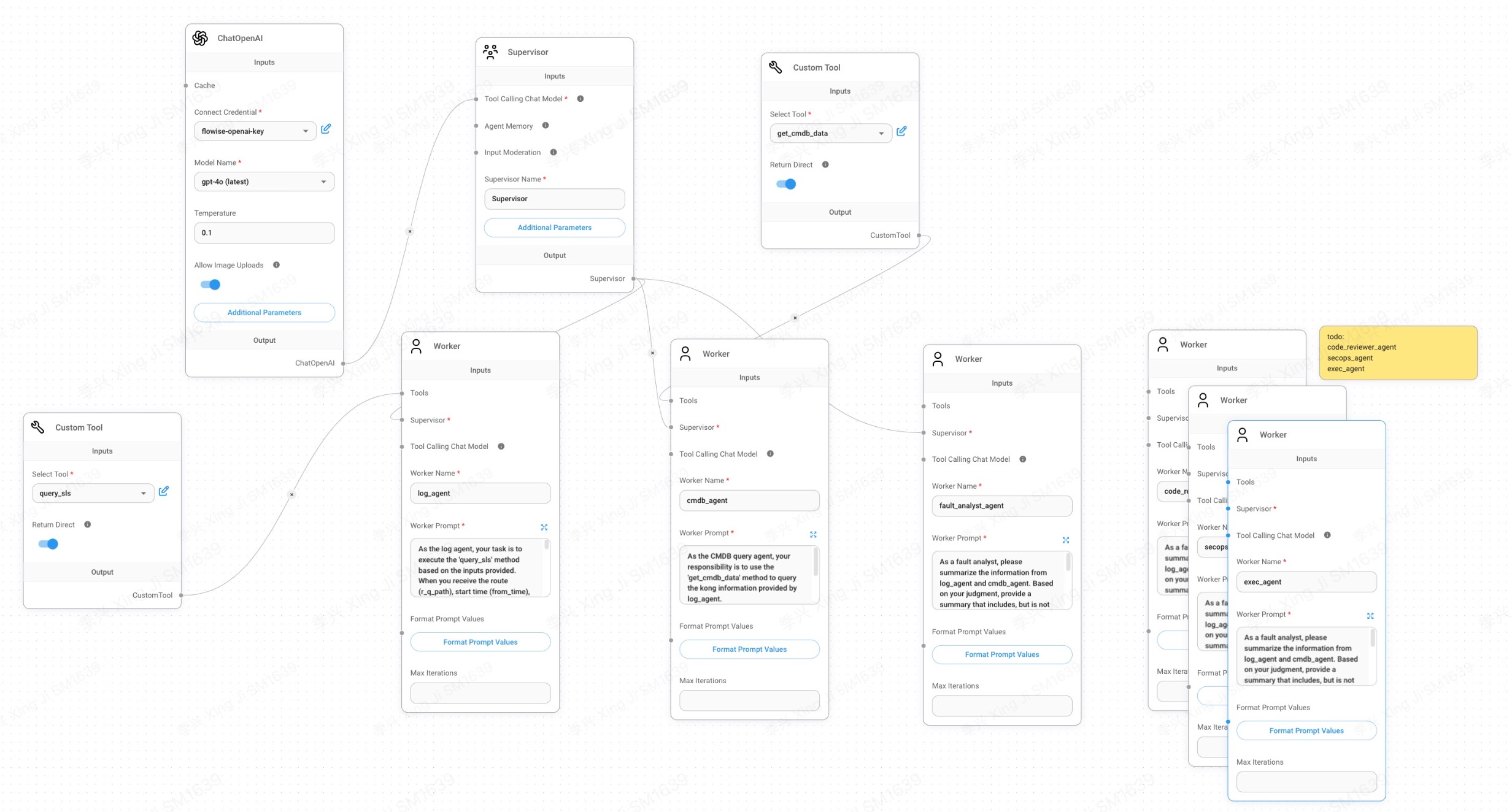

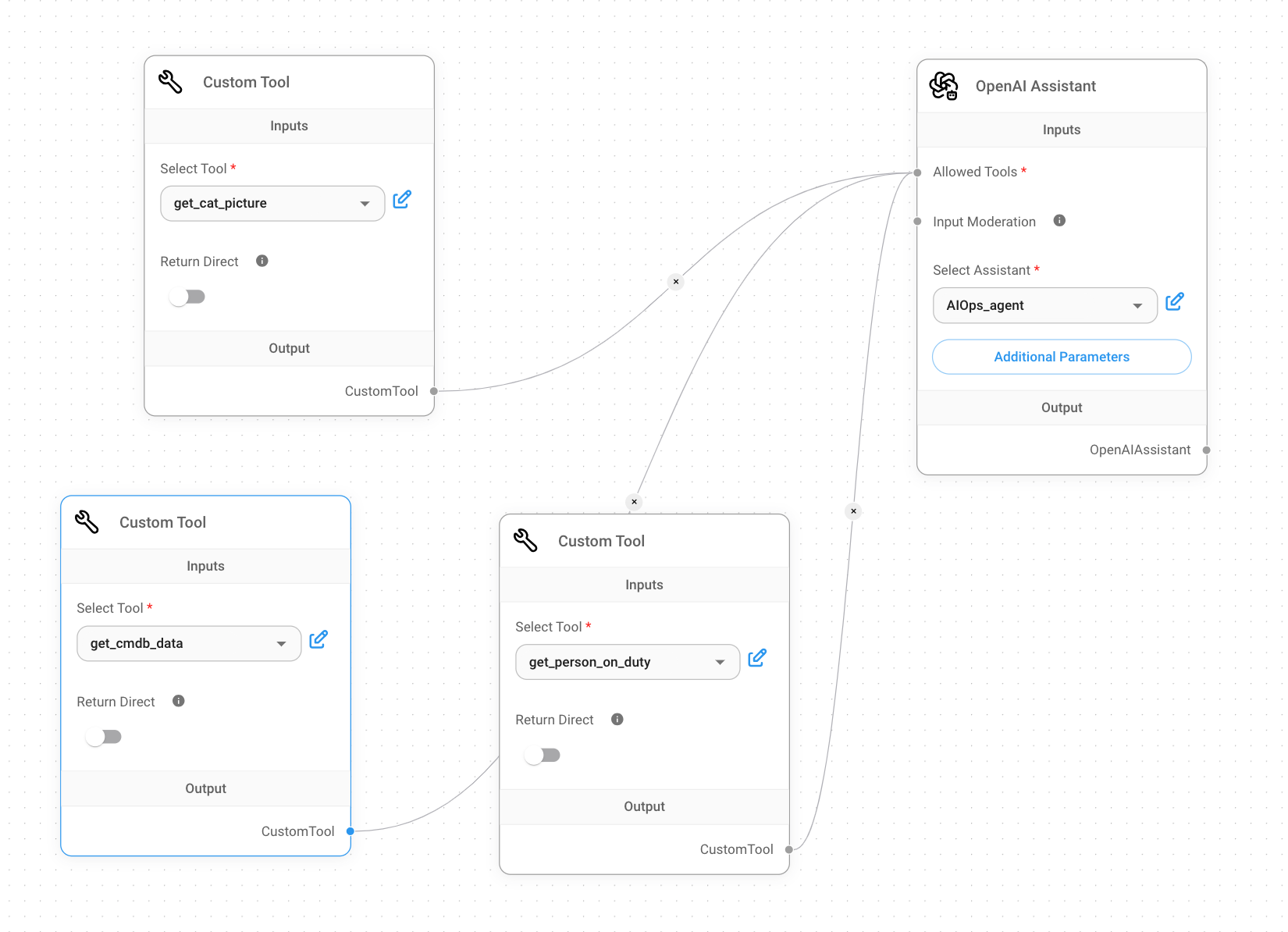

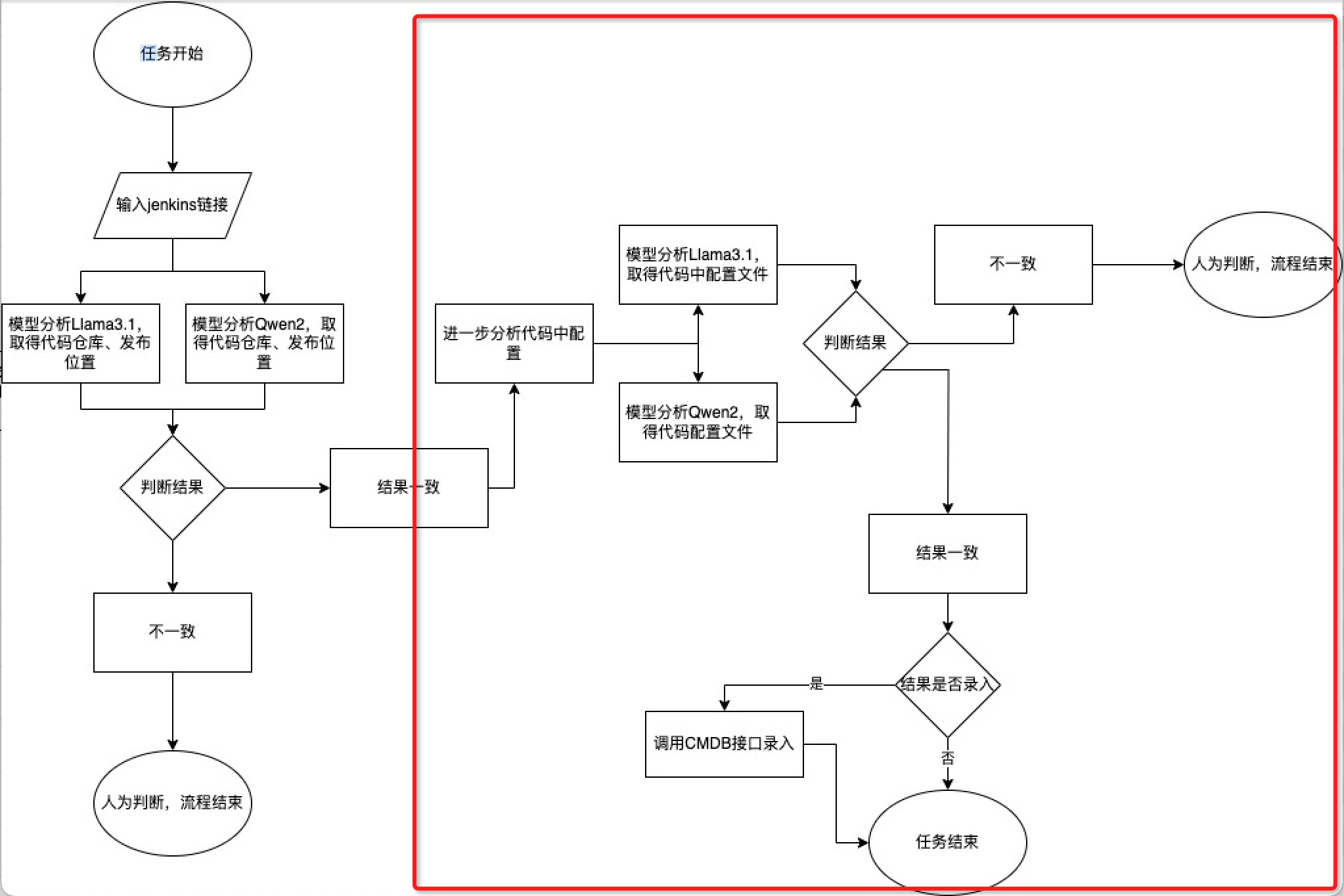

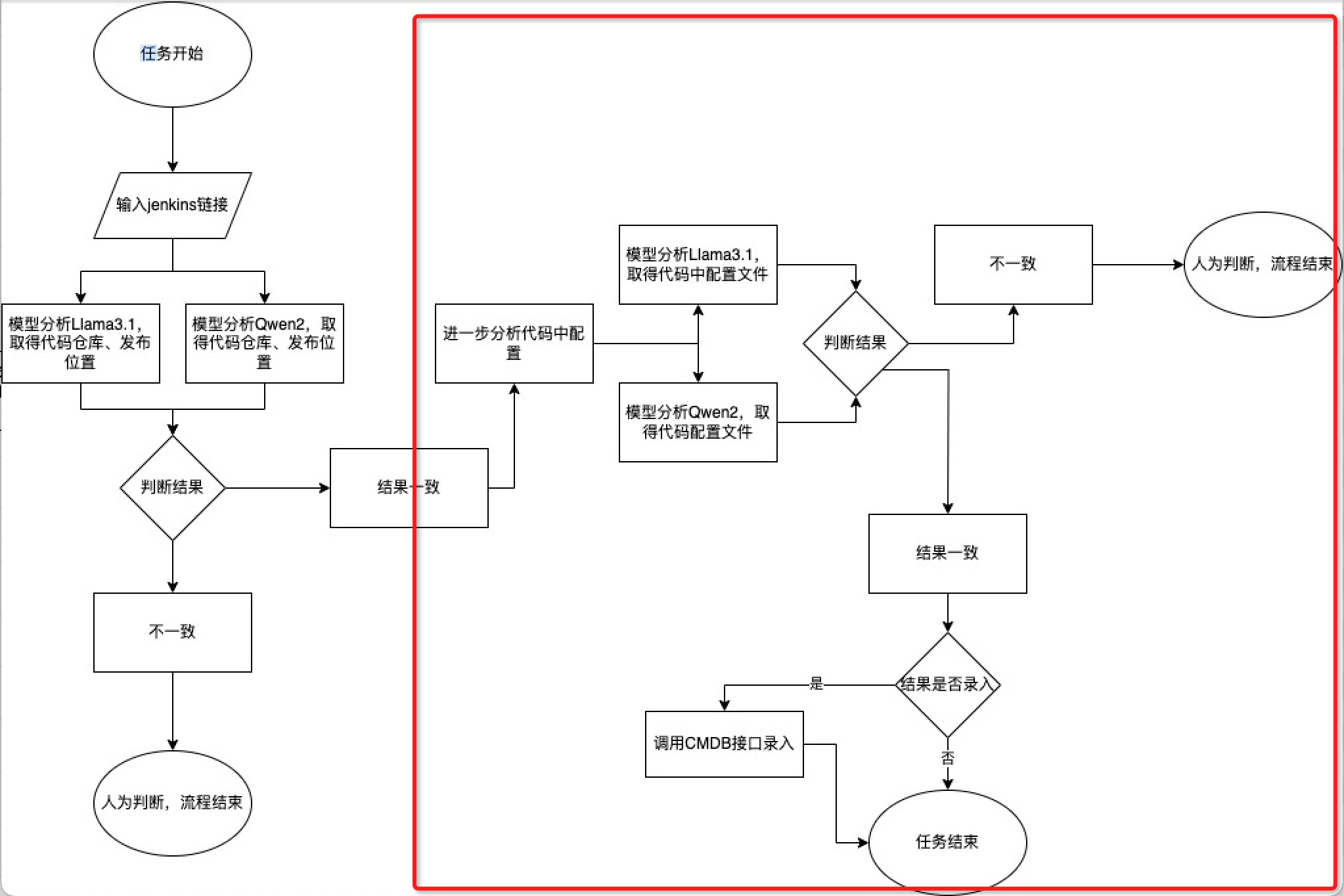

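- The logic is as follows: each model analyzes the matched content separately, the results are combined as a union, and a model then consolidates them.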

```python
import os
import re
import time

import requests

# Middleware keywords to search for; matching is case-insensitive.
KEYWORDS = ["mysql", "redis", "elasticsearch", "mongodb", "kafka", "rocketmq",
            "rabbitmq", "emq", "mqtt", "nacos", "postgresql", "doris",
            "hbase", "influxdb", "azkaban", "sls", "clickhouse",
            "mse", "dataworks", "neo4j", "http", "https", "gitlab", "jenkins"]

# Letter-based boundaries are used instead of \b so that keys such as
# MYSQL_HOST or redis_url also match (\b treats "_" as a word character).
PATTERN = re.compile(
    r'(?<![a-z])(?:' + '|'.join(map(re.escape, KEYWORDS)) + r')(?![a-z])',
    re.IGNORECASE)

# Files this script writes into the scanned directory; skipped on later runs.
OUTPUT_FILES = {"matched_content.txt", "llama_results.txt",
                "qwen_results.txt", "final_summary_combined.txt"}

# Model endpoints (placeholder addresses).
LLAMA_URL = "http://1.1.1.1:8000/v1/chat/completions"
QWEN_URL = "http://1.1.1.1:8001/v1/chat/completions"


def read_files(directory):
    """Yield (file_path, lines) for every file under directory."""
    for root, dirs, files in os.walk(directory):
        # Ignore the .git folder: prune it so os.walk never descends into it.
        dirs[:] = [d for d in dirs if d != '.git']
        for file in files:
            if file in OUTPUT_FILES:
                continue
            file_path = os.path.join(root, file)
            with open(file_path, 'r', encoding='utf-8', errors='ignore') as f:
                content = f.readlines()
            yield file_path, content


def extract_context(content, file_path):
    """Return one snippet per matching line: the line plus the 10 lines below it."""
    results = []
    for i, line in enumerate(content):
        if PATTERN.search(line):
            start = i  # start at the matching line
            end = min(i + 11, len(content))  # matching line plus the 10 lines below
            snippet = "".join(content[start:end]).strip()
            results.append(f"File path: {file_path}\n{snippet}")
    return results


def write_to_file(directory, contexts):
    """Write all snippets to matched_content.txt, separated by a rule."""
    output_file = os.path.join(directory, 'matched_content.txt')
    with open(output_file, 'w', encoding='utf-8') as f:
        for context in contexts:
            f.write(context + '\n' + '=' * 50 + '\n')
    return output_file


def send_to_model(url, model_name, prompt, content):
    """Send prompt and content to an OpenAI-compatible chat endpoint; return the reply or None."""
    headers = {"Content-Type": "application/json"}
    data = {
        "model": model_name,
        "temperature": 0.2,
        "messages": [
            {"role": "system", "content": "You are a helpful assistant."},
            {"role": "user", "content": f"{prompt}\n\n{content}"}
        ]
    }
    try:
        # A timeout keeps the script from hanging if the server stalls.
        response = requests.post(url, headers=headers, json=data, timeout=300)
        response.raise_for_status()
    except requests.RequestException as e:
        print(f"Request to model failed: {e}")
        return None

    response_json = response.json()
    if 'choices' not in response_json:
        print(f"Model response does not contain 'choices': {response_json}")
        return None
    return response_json['choices'][0]['message']['content']


def write_individual_results(directory, results, model_name):
    """Save one model's results to <model_name>_results.txt."""
    output_file = os.path.join(directory, f'{model_name}_results.txt')
    with open(output_file, 'w', encoding='utf-8') as f:
        for result in results:
            f.write(result + '\n' + '=' * 50 + '\n')
    return output_file


def combine_and_summarize(directory, llama_file, qwen_file, qwen_url):
    """Concatenate both result files and ask the Qwen model to clean them up."""
    combined_content = ""
    # Read the llama and qwen result files
    with open(llama_file, 'r', encoding='utf-8') as f:
        combined_content += f.read()
    with open(qwen_file, 'r', encoding='utf-8') as f:
        combined_content += f.read()

    # Use the qwen model for the final consolidation.
    # The quoted Chinese phrase means "configuration information not provided".
    summary_prompt = """
    1. Delete any section containing “配置信息未提供” or similar useless content.
    """
    result_summary = send_to_model(qwen_url, "qwen", summary_prompt, combined_content)
    if result_summary:
        summary_file = os.path.join(directory, 'final_summary_combined.txt')
        with open(summary_file, 'w', encoding='utf-8') as f:
            f.write(result_summary)
        print(f"Summary saved to: {summary_file}")
    else:
        print("Summarization failed")


def main(directory):
    start_time = time.time()

    all_contexts = []
    for file_path, content in read_files(directory):
        contexts = extract_context(content, file_path)
        all_contexts.extend(contexts)

    # Write the matched content to a file
    matched_file = write_to_file(directory, all_contexts)

    results_llama = []
    results_qwen = []
    with open(matched_file, 'r', encoding='utf-8') as f:
        content = f.read()

    # The Chinese phrase in item 10 means
    # "configuration information not directly provided".
    analysis_prompt = """
    1. Ignore lines starting with #, //, /**, or <!--.
    2. Exclude commented lines.
    3. Extract configuration info for: MySQL, Redis, Elasticsearch, MongoDB, Kafka, RocketMQ, RabbitMQ, EMQ, MQTT, Nacos, PostgreSQL, Doris, HBase, InfluxDB, Azkaban, SLS, ClickHouse, MSE, DataWorks, Neo4j, HTTP, HTTPS, GitLab, Jenkins.
    4. Focus on URLs, usernames, passwords, hosts, ports, and database names.
    5. Extract the following attributes:
       - Username
       - Password
       - Host
       - Port
       - Database Name
       - URL or Connection String
    6. Look for configuration patterns like key-value pairs and environment variables.
    7. Ensure extracted values are not in commented sections.
    8. Extract all distinct configurations.
    9. Handle different configuration formats (JSON, YAML, dictionaries, env variables).
    10. Delete sections containing “**配置信息未直接提供**” or similar useless content.
    """

    # Call the llama and qwen models separately
    result_llama = send_to_model(LLAMA_URL, "llama", analysis_prompt, content)
    result_qwen = send_to_model(QWEN_URL, "qwen", analysis_prompt, content)
    if result_llama:
        results_llama.append(result_llama)
    if result_qwen:
        results_qwen.append(result_qwen)

    # Save the llama and qwen results to separate files
    llama_file = write_individual_results(directory, results_llama, "llama")
    qwen_file = write_individual_results(directory, results_qwen, "qwen")

    # Consolidate the llama and qwen results
    combine_and_summarize(directory, llama_file, qwen_file, QWEN_URL)

    end_time = time.time()
    total_duration = end_time - start_time
    print(f"Total time: {total_duration:.2f} s")


if __name__ == "__main__":
    main("/Users/jixing/PycharmProjects/AIOps-utils/Athena_Legacy")
```
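The script forms its union by concatenating the text from both models and letting Qwen clean it up. If the models were instead asked for structured records, the union could be computed deterministically before the final pass. Here is a minimal sketch under that assumption. The record fields (`type`, `host`, `port`) and the sample values are hypothetical.

```python
def union_records(*model_outputs):
    """Merge record lists from several models, dropping duplicates.

    Records are compared on (type, host, port), case-insensitively, and the
    first occurrence of each key is kept, so the output order is stable.
    """
    merged = {}
    for records in model_outputs:
        for record in records:
            key = (str(record.get("type", "")).lower(),
                   str(record.get("host", "")).lower(),
                   str(record.get("port", "")))
            merged.setdefault(key, record)
    return list(merged.values())


# Hypothetical per-model results, for illustration only.
llama_records = [{"type": "MySQL", "host": "10.0.0.5", "port": 3306}]
qwen_records = [
    {"type": "mysql", "host": "10.0.0.5", "port": "3306"},
    {"type": "redis", "host": "10.0.0.6", "port": 6379},
]
combined = union_records(llama_records, qwen_records)
print(len(combined))
```

Deduplicating in code keeps the final model pass short and makes the result reproducible, at the cost of requiring both models to follow the record format.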